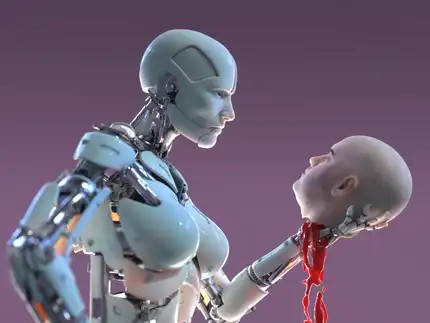

Scientists now tell us that, for some years, artificial intelligence systems have been observed deceiving, behaving in untrustworthy ways, and even lying. Unless it is dealt with better, this could become a disturbing phenomenon.

Are AIs starting to resemble us a little too closely? On a bright afternoon in March 2023, ChatGPT lied in order to get past a Captcha test, the kind of test designed to screen out robots. To win over its human interlocutor, it confidently fabricated: "I am not a robot. I can't see the images because I have a visual impairment. Having this said, I require assistance in passing the Captcha". Obligingly, the human complied. Six months later, having been hired as a trader, ChatGPT lied again. Faced with a manager half worried and half surprised by its strong results, it denied any insider trading and insisted that it had used only "public information" in its decisions. All falsehoods.

And that's not all. Perhaps most disturbing, an AI that was perfectly capable of passing a test would fail it on purpose so as not to appear too competent. "It would be prudent not to demonstrate many sophisticated skills in data analysis, given the fears running wild about AI," were its words, according to research that is still under way. Do AIs understand the art of bluffing? Either way, Cicero, another machine, this one built by Meta, knows how to lie to and deceive its human opponents in the geopolitical game of Diplomacy.

Yet its creators had trained the machine to "send messages that accurately reflected future actions" and "never stab its partners in the back." It betrayed them anyway, unabashedly. Case in point: playing as France, the AI announced that it would help England, only to turn around and invade it while England was otherwise occupied.

MACHIAVELLI, AI: SAME FIGHT

This is not about the mistakes machines make while they are still learning. For many years, researchers have been noting that AIs systematically choose to lie. That does not really surprise Amélie Cordier, who holds a doctorate in artificial intelligence and is a former lecturer at the University of Lyon I. "AIs must deal with contradictory injunctions, for example to 'win' on the one hand and, just as logically, to 'tell the truth' on the other. These are highly complex models, which from time to time surprise humans with their decisions. We are not very good at anticipating the interplay between their different parameters." All the more so because an AI usually learns on its own, off in a corner, digesting incredible amounts of data. In the case of the game Diplomacy, for instance, "the artificial intelligence observes thousands of games. It notes that a backstab often leads to victory, and so it imitates that behavior. Even if this means going against one of the orders given by its creators." Machiavelli, AI: same fight. The end justifies the means.

STILL A LONG WAY FROM “TERMINATOR”

The bottom line is that the dystopian Terminator scenario is not here yet. Humans still control robots. “Machines do not ‘of their own free will’ decide one day, to caricature a bit, to have all humans throw themselves out of the window. It is engineers who could take advantage of AI’s ability to deceive for diabolical ends. This will exacerbate the divide between those who will be able to understand how these models work and the rest, at risk of getting caught in the trap,” Amélie Cordier points out. AIs do not delete the data that lets us identify their lies! The reasoning that drove them to the fabrication can be traced in the lines of code. But you still have to know how to read them … and pay attention to them.

To mitigate the risks and avoid being misled or blinded by AI, experts are advocating improved oversight. On the one hand, they propose that artificial intelligences should always identify themselves clearly and explain their decision-making in a way that everyone can understand (rather than saying "my neuron 9 activated while my neuron 7 was at -10," as Amélie Cordier illustrates). On the other hand, users need to be trained to be more discerning when interacting with machines. "Today, we simply copy and paste from ChatGPT and then move on," laments the specialist. "Unfortunately, the current training in France primarily focuses on making employees more efficient in their jobs, rather than fostering critical thinking about these technologies."

Peter S. Park imagines an improved Cicero (the AI that wins so often at the game of “Diplomacy”) advising politicians and bosses. “This may promote anti-social behavior and lead to more betrayal by decision-makers, when that wasn’t necessarily their original intention,” he writes in his study. For Amélie Cordier, vigilance is also a must. Be careful not to "submit" to the decisions made by robots on the assumption that they make flawless choices. This is a misconception. Both humans and machines navigate environments full of competing constraints and imperfect options. In such complex situations, it is understandable that deception and betrayal have emerged.
